Introducing Google ‘Perspective’ integrations, and better sleep for communicators

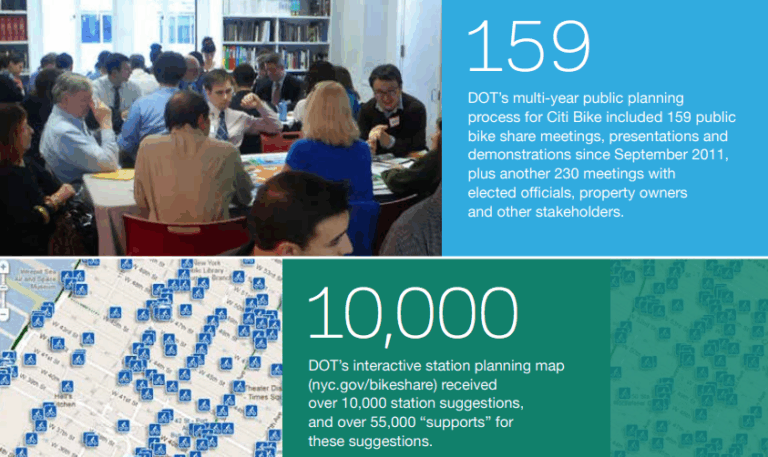

Despite the proliferation of online discussion forums and social media, many agencies have struggled to embrace online dialog as a functional part of the public process. The reason for their hesitation is simple: curating these spaces has historically demanded a significant investment of time, along with constant worry over what might be posted.

The problem isn’t limited to the public sector. Publishers like the New York Times have grappled with the challenge of managing online comments. Today the Times employs a team of 14 moderators to review approximately 11,000 comments each day.

Whether you’re a publisher or a public agency, the choice between labor-intensive moderation and the risks inherent in unmoderated spaces is a difficult one, and it often ends with providing fewer opportunities for the public to engage.

Google ‘Perspective’ Enters the Picture

Recognizing this challenge as a great opportunity for technology to aid human moderators, Google’s Jigsaw group launched ‘Perspective’ in 2017.

Perspective uses machine learning models to score the perceived impact a comment might have on a conversation. The model was trained using hundreds of thousands of human-moderated comments to identify patterns that make a comment “toxic”. For the purposes of civil dialog, “toxic” means “a rude, disrespectful, or unreasonable comment that is likely to make you leave a discussion.”

The Perspective API has enabled organizations like the New York Times, Wikipedia, The Guardian, and The Economist to instantly score comments on a scale of 0 to 100 for the following factors:

- Inflammatory: Intending to provoke or inflame

- Obscene: Obscene or vulgar language such as cursing

- Spam: Irrelevant and unsolicited commercial content

- Incoherent: Difficult to understand, nonsensical

- Unsubstantial: Trivial or short comments

- Attack on author: An attack on the author of the article or post

- Attack on commenter: An attack on a fellow commenter

Whereas prior moderation tools have been mostly limited to detecting profanity, Perspective facilitates a more nuanced, quantified approach.
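For readers curious what scoring a comment might look like in practice, here is a minimal Python sketch of building a scoring request and reading the result. The helper names `build_request` and `parse_scores` are our own, and the attribute names are assumed to correspond to the factors listed above; check the current Perspective documentation before relying on them. The API reports each score as a probability between 0 and 1, so multiplying by 100 is our assumption about how the 0 to 100 scale is obtained. The sample response is hand-written for illustration and is not real API output.

```python
import json

# Attribute names assumed to match the factors listed above.
ATTRIBUTES = [
    "TOXICITY", "INFLAMMATORY", "OBSCENE", "SPAM",
    "INCOHERENT", "UNSUBSTANTIAL", "ATTACK_ON_AUTHOR", "ATTACK_ON_COMMENTER",
]


def build_request(text, attributes=ATTRIBUTES, language="en"):
    """Build the JSON body for a comment-scoring request."""
    return {
        "comment": {"text": text},
        "languages": [language],
        "requestedAttributes": {name: {} for name in attributes},
    }


def parse_scores(response):
    """Convert 0-1 summary scores into the 0-100 scale used in this post."""
    return {
        name: round(data["summaryScore"]["value"] * 100)
        for name, data in response.get("attributeScores", {}).items()
    }


# Hand-written sample shaped like a response (illustrative values only).
sample_response = {
    "attributeScores": {
        "TOXICITY": {"summaryScore": {"value": 0.82, "type": "PROBABILITY"}},
        "SPAM": {"summaryScore": {"value": 0.03, "type": "PROBABILITY"}},
    }
}

print(json.dumps(build_request("Example comment", ["TOXICITY"]), indent=2))
print(parse_scores(sample_response))
```

In a real integration, the request body would be sent to the API with a key, and the parsed scores stored alongside each comment for moderators to sort and filter.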

According to NYT editors:

“The Times hopes that the project will expand viewpoints, provide a safe platform for diverse communities to have diverse discussions and allow readers’ voices to be an integral part of nearly every piece of reporting. The new technology will also free up Times moderators to engage in deeper interactions with readers.”

Those outcomes are great for news organizations, and they could have even more profound implications for public agencies. Freeing up staff capacity while providing increased access for diverse voices is a big win-win.

Perspective Applied to the Public Sector

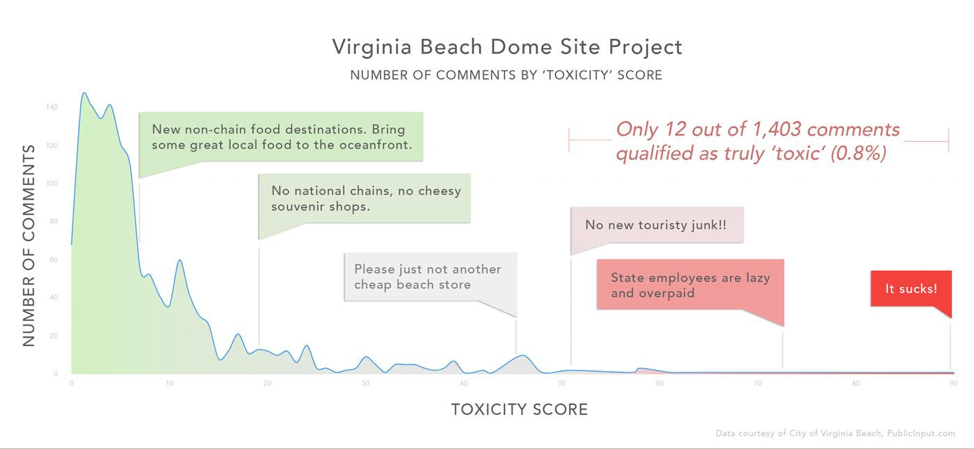

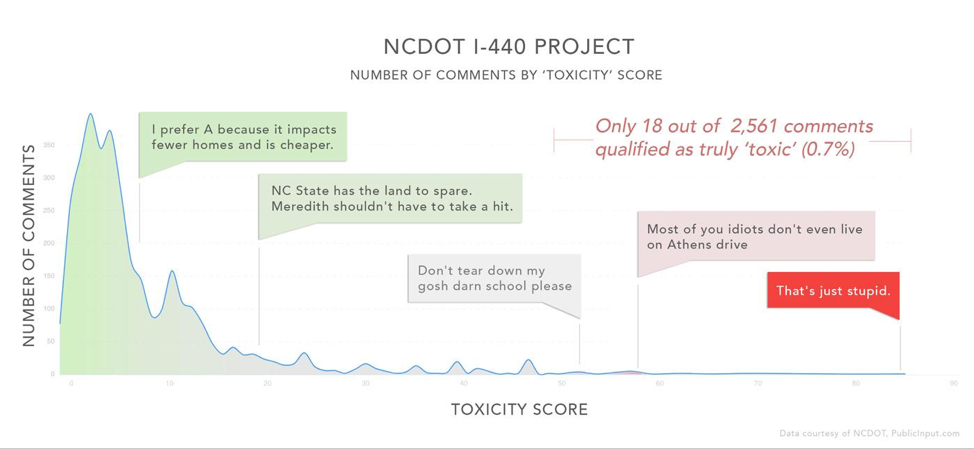

To glimpse how tools like this might be applied, we analyzed engagement data from the City of Virginia Beach and the North Carolina Department of Transportation.

Both agencies recently conducted outreach on high-profile projects and received thousands of public comments – 1,403 comments on Virginia Beach’s Dome Site project and 2,561 comments on NCDOT’s I-440 Walnut to Wade project.

To see how Perspective could have supported their work, we retrospectively ran comment data from PublicInput.com’s online engagement platform through the Perspective API to determine which comments would have been deemed ‘toxic’ and how that would have affected staff moderation efforts.

Bad actors represent less than 1%

While one might assume that online ‘trolls’ constitute a large portion of participants, in the samples we analyzed, less than 1% of total comments received were considered toxic.

In the case of Virginia Beach, only 12 of 1,403 comments had a toxicity score of 50 or higher. That pattern also held true on NCDOT’s I-440 project, where 18 of 2,561 were found to be potentially toxic.
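The arithmetic behind the "less than 1%" figure is easy to check. This short Python sketch uses only the counts quoted above; the function name `toxic_share` is ours.

```python
def toxic_share(toxic_count, total_count):
    """Return the percentage of comments flagged as potentially toxic."""
    return 100.0 * toxic_count / total_count


# (flagged comments, total comments) as reported for each project.
projects = {
    "Virginia Beach Dome Site": (12, 1403),
    "NCDOT I-440 Walnut to Wade": (18, 2561),
}

for name, (toxic, total) in projects.items():
    share = toxic_share(toxic, total)
    print(f"{name}: {share:.2f}% of {total} comments flagged as potentially toxic")
```

Both shares come out below 1%, at roughly 0.86% for Virginia Beach and 0.70% for NCDOT.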

The infographics show comment volume by toxicity, where comments to the left are considered generally healthy dialog, and those to the right are potentially unhealthy. Specific examples are included at various toxicity levels to provide context.

These charts point to a decidedly more optimistic future for online public dialog, where the majority of participants adhere to widely accepted norms of public conversation.

Reclaiming staff time from the trolls

In the case of NCDOT’s I-440 project, a person reading at an average of 250 words per minute would need 628 minutes (about 10.5 hours) to manually moderate every comment.

With a tool like Perspective, manual moderation time would have been reduced to about 15 minutes, even if staff chose to review anything with a toxicity score over 25.
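The time estimate is a simple words-per-minute calculation, sketched below in Python. The 250 words per minute and 628 minutes come from the figures above, which together imply roughly 157,000 words of comments. The function `review_minutes` and the sample comments and scores are hypothetical stand-ins, not the actual NCDOT data.

```python
WORDS_PER_MINUTE = 250  # reading speed quoted in the post

# Total word count implied by the post's figures: 628 minutes at 250 wpm.
implied_total_words = 628 * WORDS_PER_MINUTE


def review_minutes(comments, scores, threshold=None, wpm=WORDS_PER_MINUTE):
    """Minutes needed to read the comments selected for manual review.

    With threshold=None every comment is read; otherwise only comments
    whose toxicity score (0-100) is above the threshold are counted.
    """
    words = sum(
        len(text.split())
        for text, score in zip(comments, scores)
        if threshold is None or score > threshold
    )
    return words / wpm


# Hypothetical data: three comments of 500, 250 and 250 words.
sample_comments = ["word " * 500, "word " * 250, "word " * 250]
sample_scores = [5, 30, 80]

print(implied_total_words)
print(review_minutes(sample_comments, sample_scores))                # read everything
print(review_minutes(sample_comments, sample_scores, threshold=25))  # read only scores over 25
```

Lowering the threshold trades staff time for caution: the lower the cutoff, the more comments a human still reads.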

Beyond staff time: encouraging more productive language

While immediate wins might be found in freeing up staff time, a potential second-wave impact is hidden one layer deeper. If we know why a comment is toxic, why not nudge a participant towards more productive language?
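As a rough illustration of the nudge idea, this Python sketch maps per-factor scores to short suggestions. It is not the beta implementation itself: the `HINTS` wording, the `feedback` function, and the threshold of 50 are all hypothetical choices made for this example.

```python
# Hypothetical hints keyed by factor; the wording is illustrative only.
HINTS = {
    "INFLAMMATORY": "This may read as provoking. Could you state your concern more neutrally?",
    "OBSCENE": "Consider removing profanity so your point reaches more readers.",
    "ATTACK_ON_COMMENTER": "Try responding to the idea rather than the person.",
    "UNSUBSTANTIAL": "Adding a sentence about why you feel this way would help staff.",
}


def feedback(scores, threshold=50):
    """Return hints for factors scoring at or above the threshold, highest first."""
    flagged = sorted(
        (name for name, value in scores.items()
         if value >= threshold and name in HINTS),
        key=lambda name: scores[name],
        reverse=True,
    )
    return [HINTS[name] for name in flagged]


# Example scores on the 0-100 scale (made up for illustration).
for hint in feedback({"OBSCENE": 90, "INFLAMMATORY": 60, "SPAM": 10}):
    print(hint)
```

Showing the reason alongside the suggestion lets a participant revise before submitting, rather than finding out later that a comment was held for review.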

To explore this, we’ve created a beta implementation of real-time user comment feedback for anyone to experiment with:

Feel free to try it out, and we’d love to hear your thoughts on the potential for technologies like Perspective in your work!