We are all about public engagement at PublicInput.com, but more importantly effective public engagement. An important step of this is lowering the barrier to getting people’s input. So the question was posed, “are we getting the most out of our online surveys?”

As the Lead Data Scientist at PublicInput, I got excited. We dug into the data, and here are some of the high-level points we came up with.

Our Test Case: ReachNC

ReachNC, a statewide North Carolina initiative, has used our platform to aid their engagement. We’ve worked with Reach to push out surveys on WRAL and EducationNC, sent out surveys in email blasts to subscribers, and done widespread targeted Facebook outreach.

In all, Reach’s content and questions have been seen by over 2 million people around the state of North Carolina through PublicInput’s platform. They have received hundreds of thousands of votes and comments. This sheer quantity of data made them a good choice for a test case on what makes an effective online survey.

After some number crunching, we’ve compiled ways to improve response rates using the lessons we’ve learned with Reach. In this post, I’ll cover two in particular: survey length and question type.

Survey Length

One of the major questions when making an online survey is how much you can ask a random respondent before they lose attention.

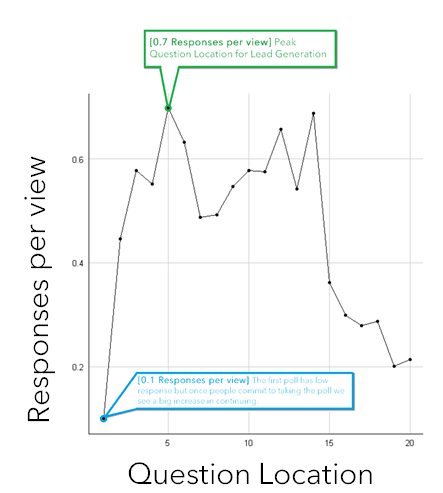

This chart shows the responses per view we expect as respondents move deeper into the survey. Question 1 has a ‘response per view’ rate of 0.1, meaning it takes 10 views to get one response. While 10% may initially sound small, keep in mind that this is across millions of views, many of which were online advertisements. That’s actually about 30X higher engagement than the average display ad.
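As a quick illustration of the arithmetic behind that rate, here is a minimal Python sketch. The function names and the counts of 100 responses and 1,000 views are hypothetical, chosen only to reproduce the 0.1 rate mentioned above; they are not ReachNC's actual numbers.

```python
def responses_per_view(responses: int, views: int) -> float:
    """Return the share of views that produced a response."""
    if views <= 0:
        raise ValueError("views must be positive")
    return responses / views


def views_per_response(rate: float) -> float:
    """Return how many views it takes, on average, to get one response."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return 1 / rate


# Hypothetical counts, picked only to match the 0.1 rate quoted in the post.
question_1_rate = responses_per_view(responses=100, views=1000)
question_1_views_needed = views_per_response(question_1_rate)

print(f"Rate: {question_1_rate:.2f}")
print(f"Views needed per response: {question_1_views_needed:.0f}")
```

The same two helpers can be applied to each question position to rebuild a chart like the one above from raw view and response counts.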

Once someone has answered that first question, things look a lot better. Question 5 gets as high as 0.7 responses per view. This is a great place to insert a lead question, as we expect a drop-off in responses soon after.

For ReachNC, we are often looking to generate ‘leads’, where we ask for emails and phone numbers so we can continue the conversation. Striking a balance between gathering information and generating leads can be a challenge. Put the lead question too late and nobody makes it there; put it too early and you don’t gather enough information. This analysis shows us that 5 questions is a good limit for lead generation polling.
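One simple way to turn this into a rule of thumb is to place the lead question at the position where the response rate peaks. The sketch below assumes you already have a response-per-view rate for each question position. Only the 0.1 (question 1) and 0.7 (question 5) values come from this post; every other number in the list is a made-up placeholder, and `best_lead_position` is a hypothetical helper rather than part of our platform.

```python
from typing import Sequence


def best_lead_position(rates: Sequence[float]) -> int:
    """Return the 1-based question position with the highest response rate.

    If several positions tie, the earliest one is returned.
    """
    if not rates:
        raise ValueError("rates must not be empty")
    best_index = max(range(len(rates)), key=lambda i: rates[i])
    return best_index + 1


# Placeholder rates for questions 1..7. Only the first (0.10) and the
# fifth (0.70) values are taken from the post; the rest are invented
# purely for illustration.
illustrative_rates = [0.10, 0.45, 0.55, 0.65, 0.70, 0.60, 0.50]

lead_position = best_lead_position(illustrative_rates)
print(f"Place the lead question at position {lead_position}")
```

With real data you would likely also want to weigh how much information you have gathered by that point, which this sketch does not attempt.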

ReachNC also runs longer polls that are sent to groups we expect will finish the entire survey. This is what leads to the uptick we see after question 8, and it is less relevant to a discussion of online surveys aimed at any citizen.

Overall, if your goal is attracting complete participation from the largest possible audience, the optimum survey length is 5–6 questions.

Longer surveys are fine, with the caveat that the most important questions should be placed toward the beginning of your survey.

Question Type

We offer over 10 different question types that customers can use in their surveys. The five most common question types that ReachNC uses are:

- Single Choice Poll: Multiple Choice, select one

- Multiple Choice Poll: Multiple Choice, select all that apply

- Q and A: Multiple Choice question with a correct answer

- Comment: Write in a comment

- Short Comment: One Liner comment

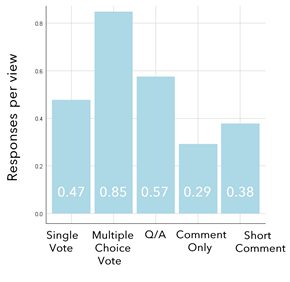

Similar to the survey length analysis above, we looked at each of these areas for responses per view.

It is important to note that the number of data points for Multiple Choice Vote is limited, so the 0.85 number is somewhat inflated. Nonetheless, this closely matches what we intuitively expected: asking the user to share free-form text generally creates the most drop-off.
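To see why a limited number of data points makes a rate like 0.85 less trustworthy, it can help to put an uncertainty range around it. The sketch below uses a Wilson score interval, which is a standard statistical technique and not something described in our analysis above. It assumes each view yields at most one response, and the view counts (20 and 2,000) are hypothetical.

```python
import math
from typing import Tuple


def wilson_interval(responses: int, views: int, z: float = 1.96) -> Tuple[float, float]:
    """Return an approximate 95% Wilson score interval for responses/views."""
    if views <= 0 or not 0 <= responses <= views:
        raise ValueError("need views > 0 and 0 <= responses <= views")
    p = responses / views
    denom = 1 + z**2 / views
    center = (p + z**2 / (2 * views)) / denom
    half_width = z * math.sqrt(p * (1 - p) / views + z**2 / (4 * views**2)) / denom
    return center - half_width, center + half_width


# Hypothetical counts: both give a rate of 0.85, but with very different
# amounts of data behind them.
small_sample = wilson_interval(responses=17, views=20)
large_sample = wilson_interval(responses=1700, views=2000)

print(f"20 views:   {small_sample[0]:.2f} to {small_sample[1]:.2f}")
print(f"2000 views: {large_sample[0]:.2f} to {large_sample[1]:.2f}")
```

The interval for the small sample is much wider, which is one way to express that a rate built on few views should be read with caution.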

Comment questions can yield valuable information, but they significantly slow user momentum on a survey. Using them wisely (definitely not as the opening question) can get you the value inherent in comment responses while still keeping your conversion rate high.

The most used questions by ReachNC, and many of our customers, are ‘Single Choice’ and ‘Comment’. We almost always start off with a Single Choice question as a low barrier way to get people to start taking the survey.

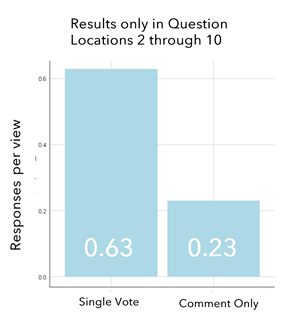

When looking at drop-off later in the survey (questions 2–10), conversion for the single-vote question increases significantly, while conversion for the ‘Comment’ question type holds steady.

Keeping this in mind, it’s important to recognize the right point to ask for open-ended comments from your online participants.

We recommend placing open-ended questions only after your participants have answered the most critical single-choice questions for your survey.

This is a quick snapshot of one of the many ways in which we are improving the process through our Public Engagement Management System. We hope this high-level information helps you create more effective surveys and enhance your public engagement. We would love to help you improve your online engagement!

Thanks,

Bryan Noreen

Lead Data Scientist